Multilayer Perceptron (MLP)

In this project, the handwritten digits of the MNIST database are classified with a fully connected multilayer perceptron (MLP).

The work consists of loading the database and separating it into training and test sets, then building a dense (fully connected) multilayer perceptron (MLP). The model is trained with the training and validation data. Once trained, it is tested on the test data, and its predictions are compared with the target labels. TensorFlow and scikit-learn (sklearn) are used for these steps.

MNIST dataset

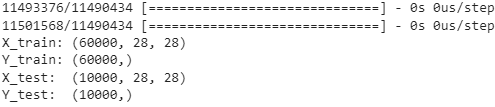

The public MNIST database [1] contains 70,000 images, already divided into 60,000 for training and 10,000 for testing. Each image is a 28 x 28 grayscale picture of a digit (0 to 9) handwritten by a person.

Data preparation

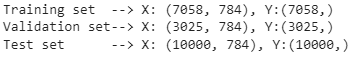

The training and test sets are downloaded from the database and then resized and normalized. Next, the data are divided into training, validation and test sets in order to verify the behavior of the network. The split follows a 60/20/20 rule (60% training, 20% validation, 20% test).

Downloading the training and test sets
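A minimal sketch of the download step, assuming it uses the MNIST loader bundled with Keras in TensorFlow (`tf.keras.datasets.mnist.load_data`). The project's actual loading code is not shown, and the wrapper name `load_mnist` is hypothetical.

```python
def load_mnist():
    """Download MNIST through Keras and return its predefined train/test split."""
    # Imported inside the function so this module can be imported without TensorFlow.
    import tensorflow as tf

    # x_*: uint8 arrays of shape (n, 28, 28); y_*: integer labels 0-9
    (x_train, y_train), (x_test, y_test) = tf.keras.datasets.mnist.load_data()
    return (x_train, y_train), (x_test, y_test)
```

Calling `load_mnist()` downloads the data on first use and returns 60,000 training images and 10,000 test images, each a 28 x 28 `uint8` array, together with their integer labels.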

Resizing
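A dense first layer expects each sample as a flat vector, so a likely reading of this step, assumed here, is reshaping every 28 x 28 image into a 784-element vector. The helper `flatten_images` is a hypothetical name used only for this sketch.

```python
import numpy as np


def flatten_images(images: np.ndarray) -> np.ndarray:
    """Reshape a stack of images (n, 28, 28) into row vectors (n, 784)."""
    n_images, height, width = images.shape
    # Row-major reshape: pixel (row r, column c) lands at position r * width + c
    return images.reshape(n_images, height * width)


# Example with two synthetic 28 x 28 "images"
batch = np.arange(2 * 28 * 28).reshape(2, 28, 28) % 256
flat = flatten_images(batch)
print(flat.shape)  # (2, 784)
```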

Normalization
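The normalization method is not specified. A common choice, assumed in this sketch, is dividing the 8-bit pixel values by 255 so that they fall in the range [0, 1]; `normalize_pixels` is a hypothetical helper.

```python
import numpy as np


def normalize_pixels(images: np.ndarray) -> np.ndarray:
    """Scale 8-bit grayscale values from [0, 255] to floats in [0.0, 1.0]."""
    return images.astype(np.float32) / 255.0


pixels = np.array([[0, 128, 255]], dtype=np.uint8)
scaled = normalize_pixels(pixels)
print(scaled)
```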

Splitting the data with the 60/20/20 rule
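A sketch of a 60/20/20 split with NumPy, assuming the split is applied to a single pool of labeled images (the text does not say exactly which images are pooled) and that the data are shuffled first. scikit-learn's `train_test_split`, called twice, could produce the same kind of split; the function `split_60_20_20` and its fixed seed are hypothetical.

```python
import numpy as np


def split_60_20_20(x, y, seed=0):
    """Shuffle (x, y) together, then split into 60% train, 20% validation, 20% test."""
    rng = np.random.default_rng(seed)
    idx = rng.permutation(len(x))  # same permutation keeps images and labels aligned
    n_train = len(x) * 60 // 100   # integer arithmetic avoids float rounding surprises
    n_val = len(x) * 20 // 100
    train_idx = idx[:n_train]
    val_idx = idx[n_train:n_train + n_val]
    test_idx = idx[n_train + n_val:]
    return ((x[train_idx], y[train_idx]),
            (x[val_idx], y[val_idx]),
            (x[test_idx], y[test_idx]))


# Toy data: 100 samples whose label is the sample id modulo 10
x = np.arange(100).reshape(100, 1)
y = np.arange(100) % 10
(x_tr, y_tr), (x_va, y_va), (x_te, y_te) = split_60_20_20(x, y)
print(len(x_tr), len(x_va), len(x_te))  # 60 20 20
```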

Data preparation

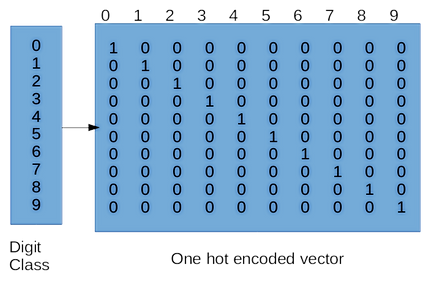

One-hot encoding

One-hot encoding represents each label as a vector with one position per class: the position of the digit is set to 1 and all other positions to 0. Each position corresponds to one output neuron, which gives a probability for that digit; of the 10 output neurons (one per digit in this case), the one with the highest probability determines the prediction.
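A short NumPy illustration of the encoding and of decoding a prediction with `argmax`. The helper names are hypothetical; Keras also provides `tf.keras.utils.to_categorical` for the encoding step.

```python
import numpy as np


def one_hot(labels, num_classes=10):
    """Encode integer labels 0..num_classes-1 as one-hot row vectors."""
    labels = np.asarray(labels)
    encoded = np.zeros((labels.size, num_classes), dtype=np.float32)
    encoded[np.arange(labels.size), labels] = 1.0  # set the label's position to 1
    return encoded


def decode(probabilities):
    """Return the predicted digit for each row: the position of the highest value."""
    return np.argmax(probabilities, axis=1)


targets = one_hot([3, 0, 9])
print(targets[0])       # [0. 0. 0. 1. 0. 0. 0. 0. 0. 0.]
print(decode(targets))  # [3 0 9]
```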

Creating the dense neural network (MLP)

This dense (fully connected) perceptron-type network was built as a sequential model. The hidden neurons use the sigmoid activation function to compute their outputs, and the output layer uses softmax, which turns the final 10 values into probabilities; the position with the highest probability is decoded as the digit predicted by the network.
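The architecture can be illustrated with a NumPy forward pass: one sigmoid hidden layer followed by a 10-neuron softmax output layer. The hidden layer size (128), the weight initialization and all function names are assumptions for this sketch, not the project's configuration. In Keras, a comparable model would be a `tf.keras.Sequential` stack of `Dense` layers using `activation="sigmoid"` and `activation="softmax"`.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    # Subtracting the row maximum avoids overflow and does not change the result.
    z = z - z.max(axis=1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def init_mlp(n_in=784, n_hidden=128, n_out=10, seed=0):
    """Random weights for a hypothetical 784 -> 128 -> 10 network."""
    rng = np.random.default_rng(seed)
    return {
        "W1": rng.normal(0.0, 0.01, size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(0.0, 0.01, size=(n_hidden, n_out)),
        "b2": np.zeros(n_out),
    }


def forward(params, x):
    """Dense sigmoid hidden layer, then dense softmax output layer."""
    hidden = sigmoid(x @ params["W1"] + params["b1"])
    return softmax(hidden @ params["W2"] + params["b2"])


params = init_mlp()
x = np.random.default_rng(1).random((5, 784))  # 5 flattened, normalized "images"
probs = forward(params, x)                     # shape (5, 10), each row sums to 1
predicted_digits = probs.argmax(axis=1)
```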

Training the model

Training the model involves choosing hyperparameters, which determine how well or how poorly the network learns. Training is carried out over a number of epochs and uses only the training and validation sets: the network learns from the training set, and its results on the validation set are compared with those on the training set to monitor its behavior.

Setting hyperparameters
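The hyperparameters actually used are not listed. As a hedged sketch of what this step controls, the NumPy code below trains a one-hidden-layer sigmoid/softmax network with mini-batch gradient descent on categorical cross-entropy and reports training and validation accuracy after each epoch. The hyperparameter values, the plain gradient-descent optimizer and the toy Gaussian data standing in for MNIST are all assumptions for illustration. In Keras, the corresponding settings are passed to `model.compile` (optimizer, loss) and `model.fit` (`epochs`, `batch_size`, `validation_data`).

```python
import numpy as np

# Hypothetical hyperparameter values, chosen only for this toy example
HYPERPARAMETERS = {
    "n_hidden": 16,        # neurons in the hidden (sigmoid) layer
    "learning_rate": 0.5,  # step size of plain gradient descent
    "epochs": 20,          # full passes over the training set
    "batch_size": 32,      # samples per gradient update
}


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def softmax(z):
    z = z - z.max(axis=1, keepdims=True)  # numerical stability
    e = np.exp(z)
    return e / e.sum(axis=1, keepdims=True)


def predict_proba(params, x):
    hidden = sigmoid(x @ params["W1"] + params["b1"])
    return softmax(hidden @ params["W2"] + params["b2"])


def accuracy(params, x, y_onehot):
    predicted = predict_proba(params, x).argmax(axis=1)
    return float(np.mean(predicted == y_onehot.argmax(axis=1)))


def train(x_train, y_train, x_val, y_val, hp, seed=0):
    """Mini-batch gradient descent on cross-entropy; records accuracies per epoch."""
    rng = np.random.default_rng(seed)
    n_in, n_out, n_hid = x_train.shape[1], y_train.shape[1], hp["n_hidden"]
    params = {
        "W1": rng.normal(0.0, 1.0 / np.sqrt(n_in), size=(n_in, n_hid)),
        "b1": np.zeros(n_hid),
        "W2": rng.normal(0.0, 1.0 / np.sqrt(n_hid), size=(n_hid, n_out)),
        "b2": np.zeros(n_out),
    }
    lr, history = hp["learning_rate"], []
    for epoch in range(hp["epochs"]):
        order = rng.permutation(len(x_train))  # reshuffle every epoch
        for start in range(0, len(order), hp["batch_size"]):
            idx = order[start:start + hp["batch_size"]]
            xb, yb = x_train[idx], y_train[idx]
            # Forward pass
            hidden = sigmoid(xb @ params["W1"] + params["b1"])
            probs = softmax(hidden @ params["W2"] + params["b2"])
            # Backward pass: softmax + cross-entropy gives (probs - targets)
            d_out = (probs - yb) / len(idx)
            d_hidden = (d_out @ params["W2"].T) * hidden * (1.0 - hidden)
            params["W2"] -= lr * hidden.T @ d_out
            params["b2"] -= lr * d_out.sum(axis=0)
            params["W1"] -= lr * xb.T @ d_hidden
            params["b1"] -= lr * d_hidden.sum(axis=0)
        history.append((epoch + 1,
                        accuracy(params, x_train, y_train),
                        accuracy(params, x_val, y_val)))
    return params, history


# Toy data standing in for MNIST: 3 well-separated Gaussian classes in 20 dimensions
rng = np.random.default_rng(42)
labels = rng.integers(0, 3, size=900)
means = np.zeros((3, 20))
means[np.arange(3), np.arange(3)] = 4.0
x = means[labels] + rng.normal(size=(900, 20))
y = np.eye(3)[labels]

params, history = train(x[:600], y[:600], x[600:], y[600:], HYPERPARAMETERS)
for epoch, train_acc, val_acc in history:
    print(f"epoch {epoch:2d}  train acc {train_acc:.3f}  val acc {val_acc:.3f}")
```

Comparing the two accuracy columns is the check described above: a validation accuracy that stalls or drops while the training accuracy keeps rising is a sign of overfitting.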

Results

Once trained, the model is evaluated on the corresponding data sets and reaches an accuracy of 0.809 (80.9%). The following figure shows an image taken at random from the test set together with the network's prediction.
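Accuracy is the fraction of samples whose predicted digit (the position of the highest output probability) matches the target digit, so 0.809 means roughly 809 correct predictions per 1,000 evaluated images. A minimal NumPy sketch with illustrative probabilities for three classes; the helper name is hypothetical, and `sklearn.metrics.accuracy_score` computes the same quantity from arrays of labels.

```python
import numpy as np


def accuracy_from_probabilities(probabilities, one_hot_targets):
    """Fraction of rows where the most probable class equals the target class."""
    predicted = np.argmax(probabilities, axis=1)
    actual = np.argmax(one_hot_targets, axis=1)
    return float(np.mean(predicted == actual))


probs = np.array([[0.1, 0.7, 0.2],
                  [0.8, 0.1, 0.1],
                  [0.3, 0.3, 0.4],   # predicted class 2, target is 1: an error
                  [0.2, 0.5, 0.3]])
targets = np.eye(3)[[1, 0, 1, 1]]
print(accuracy_from_probabilities(probs, targets))  # 0.75
```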

For more details, you can see the report here.